GENBOOST

A gallery of algorithms for machine learning optimization

The task of the project:

Our task was to find an alternative way to optimize neural networks.

What we did and how we did it:

Using the open-source PyGMO framework, we wrote code for testing different meta-heuristic optimization algorithms on various types of deep architectures.
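PyGMO can optimize any user-defined problem (UDP): a plain Python class that exposes a `fitness` method and a `get_bounds` method. The sketch below shows one possible way to flatten a network's weights into a PyGMO decision vector. It is not the project's code: the class name `MLPWeightProblem`, the tiny 2-2-1 network, the XOR toy data, the mean-squared-error loss and the weight bounds are all hypothetical choices made for illustration. The class does not import pygmo, so it runs without it.

```python
import math


class MLPWeightProblem:
    """Hypothetical PyGMO-style UDP: the decision vector holds every weight of a small MLP."""

    def __init__(self, inputs, targets, hidden=2, bound=5.0):
        self.inputs = inputs
        self.targets = targets
        self.n_in = len(inputs[0])
        self.hidden = hidden
        self.bound = bound
        # hidden weights + hidden biases + output weights + output bias
        self.dim = self.n_in * hidden + hidden + hidden + 1

    def _forward(self, w, x):
        # Unpack the flat weight vector in a fixed order and run one forward pass.
        n_in, hid = self.n_in, self.hidden
        w_hidden = w[: n_in * hid]  # hid rows of n_in weights each
        b_hidden = w[n_in * hid : n_in * hid + hid]
        w_out = w[n_in * hid + hid : n_in * hid + 2 * hid]
        b_out = w[-1]
        h = [
            math.tanh(sum(w_hidden[j * n_in + i] * x[i] for i in range(n_in)) + b_hidden[j])
            for j in range(hid)
        ]
        z = sum(w_out[j] * h[j] for j in range(hid)) + b_out
        return 1.0 / (1.0 + math.exp(-z))  # sigmoid output in (0, 1)

    def fitness(self, w):
        # PyGMO minimizes the returned fitness vector; here it is the mean squared error.
        err = sum((self._forward(w, x) - y) ** 2 for x, y in zip(self.inputs, self.targets))
        return [err / len(self.inputs)]

    def get_bounds(self):
        # Box constraints on every weight, as (lower bounds, upper bounds).
        return ([-self.bound] * self.dim, [self.bound] * self.dim)


if __name__ == "__main__":
    udp = MLPWeightProblem([[0, 0], [0, 1], [1, 0], [1, 1]], [0, 1, 1, 0])
    print(udp.dim, udp.fitness([0.0] * udp.dim))
```

With pygmo installed, the same object could be wrapped as `pygmo.problem(udp)`, a population created with `pygmo.population(prob, size=20)`, and evolved with, for example, `pygmo.algorithm(pygmo.de(gen=100)).evolve(pop)`; the returned population's `champion_x` then holds the best weight vector found. Swapping in another PyGMO algorithm changes only that last line, which is what makes this setup convenient for comparing meta-heuristics.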

We considered weight optimization in the following architectures:

Fully connected – classification task on the MNIST dataset

Recurrent – binary classification task on the IMDB dataset

Convolutional – classification task on the MNIST dataset

We conducted exploratory research to find the most efficient ways of using evolutionary algorithms in machine learning.

The aim of the project:

Machine learning methods, and deep learning methods in particular, are developing rapidly. One of the most important stages of creating a deep learning model is its training. This process can run into several difficulties: vanishing gradients, exploding gradients, convergence of gradient descent to a local minimum that can be very different from the global one, and so on. These difficulties are often caused by the nature of gradient-based training itself.
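Vanishing gradients can be made concrete with a chain of scalar sigmoid units. Backpropagation multiplies the local derivatives σ'(z) = σ(z)(1 − σ(z)), and σ' never exceeds 0.25, so the gradient of the output with respect to the input shrinks at least geometrically with depth. The sketch below is a simplified illustration, not a model from the project: it assumes every weight is 1, every bias is 0 and the input is 0.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def chain_gradient(depth, x=0.0, w=1.0, b=0.0):
    """d(output)/d(input) for `depth` stacked scalar units a <- sigmoid(w * a + b)."""
    a, grad = x, 1.0
    for _ in range(depth):
        s = sigmoid(w * a + b)
        grad *= w * s * (1.0 - s)  # chain rule: multiply by this unit's local derivative
        a = s
    return grad


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        # Actual gradient next to the 0.25**depth upper bound.
        print(depth, chain_gradient(depth), 0.25 ** depth)
```

Only the first unit sees z = 0, where σ' reaches its maximum of 0.25; every later unit sees z > 0, so the actual gradient falls below 0.25 to the power of the depth.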

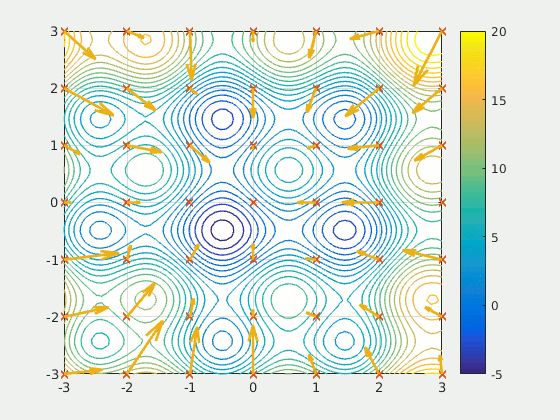

Gradient descent is not the only way to train a model. We tried evolutionary and heuristic methods of finite-dimensional optimization, in which the network's weights are treated as a single vector of real numbers. The main aim of the project was to answer the question: “Can heuristic optimization methods improve on or take the place of the gradient paradigm?”
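Once the weights are viewed as a point in a finite-dimensional box, any derivative-free optimizer can be applied. As an illustration of one such evolutionary method, here is a minimal sketch of classic differential evolution (DE/rand/1/bin), written from scratch in pure Python rather than taken from PyGMO. The function names, the sphere test function and the parameter values (F = 0.8, CR = 0.9, 20 individuals, 200 generations) are illustrative assumptions, not the settings used in the project.

```python
import random


def differential_evolution(f, bounds, pop_size=20, generations=200, F=0.8, CR=0.9, seed=0):
    """Minimize f over a box [(lo, hi), ...] with the DE/rand/1/bin scheme."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: pick three distinct individuals, all different from i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # guarantees at least one mutated coordinate
            trial = []
            for k, (lo, hi) in enumerate(bounds):
                # Binomial crossover between the mutant and the current individual.
                if rng.random() < CR or k == j_rand:
                    v = pop[a][k] + F * (pop[b][k] - pop[c][k])
                    trial.append(min(max(v, lo), hi))  # clip back into the box
                else:
                    trial.append(pop[i][k])
            f_trial = f(trial)
            # Greedy one-to-one selection: keep the trial if it is no worse.
            if f_trial <= fit[i]:
                pop[i], fit[i] = trial, f_trial
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]


def sphere(x):
    return sum(v * v for v in x)


if __name__ == "__main__":
    best_x, best_f = differential_evolution(sphere, [(-5.0, 5.0)] * 5)
    print(best_f)
```

The optimizer only ever calls `f` and never computes a derivative, so vanishing or exploding gradients do not affect it directly; minimizing a network loss would mean passing a function that maps a flattened weight vector to that loss.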

Specifics of the project:

-This is an exploratory research project.

-We worked with evolutionary algorithms.

-We tried to improve the deep learning training process at a fundamental level.

Time frame: 3 months

Year of implementation: 2019